TL;DR: GitHub Repo for TCE Demo appliance

Motivation

I was quite excited when VMware released Tanzu Community Edition. It is a free and open source distribution of VMware Tanzu, featuring Cluster-API and other amazing stuff. It was just what I needed to further expand my Kubernetes-fu, and it is suitably sized for any home-lab.

However, while TCE itself is easy enough to deploy, I had some challenges with the prerequisites for Docker and other components on my workstation(s).

An appliance seemed like a good solution for my problems and I figured other people might find this useful as well. Posting this idea in our company Slack, I learned from William about his TKG demo appliance and he shared his baseline work with me. Based on this strong foundation I implemented a set of modernizations and changes to release this TCE demo appliance.

Credits

A huge shout out to the amazing William Lam for setting me up here!

Kudos to my colleague Erik Boettcher for finding the first set of bugs :-)

What you get with the TCE demo appliance

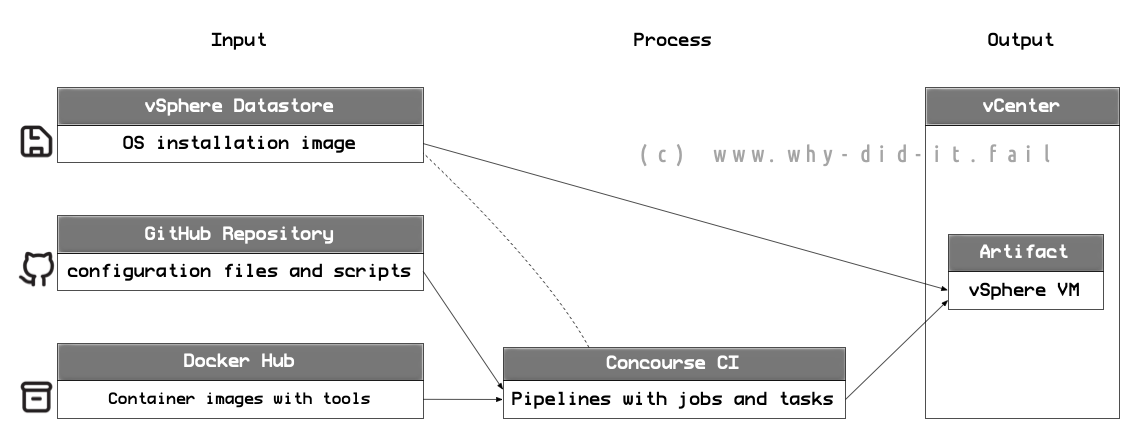

The basic idea for this solution is to provide a Virtual Appliance that pre-bundles (nearly) all required dependencies for deploying Tanzu Community Edition (TCE) managed clusters. Having said that, the virtual appliance cannot satisfy the requirements that are specific to the target platform (infrastructure provider). You will find all the details about prerequisites in the documentation; in the case of vSphere this includes things like DHCP on the network, providing the OS templates, etc.

Personally, I have deployed this appliance to my vSphere environment but others managed to deploy the appliance on VMware Fusion and Workstation as well.

So, in a nutshell:

You prepare the target platform, deploy the appliance and point your browser towards the UI. Off you go!

A look into the appliance

Inside the appliance the Tanzu CLI is launched as a “tanzu bootstrap” service, courtesy of systemd. By default, the installer UI listens on port 5555 and is accessible using HTTP, just like in the default deployment process on your local workstation. If needed, you can specify another TCP port during the deployment of the appliance.

As HTTP sends your credentials in clear text over the network, I added a reverse proxy to the appliance. Using envoy, you can connect with HTTPS on port 5556 to add transport layer security.

As of today, there is one small gotcha using the HTTPS connection:

When you launch the installation process over HTTPS (the secure port), the log output in the web browser is blank.

The logs are streamed using Gorilla WebSocket, which has multiple issues when being proxied through HTTPS.

However, the installation continues in the background and you can monitor the progress from the shell (for instance via SSH) by using journalctl -fl, read the logs at /var/log/tanzu-ce.log, or use the non-secure port (5555) to view the output.
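If you prefer to watch the log file from a script rather than from journalctl, the following is a minimal sketch of a `tail -f` style reader. It only assumes the log path mentioned above; the function names `read_new_lines` and `follow` are my own and not part of the appliance.

```python
import time

LOG_PATH = "/var/log/tanzu-ce.log"  # log location mentioned in the text above


def read_new_lines(path, offset):
    """Return (lines, new_offset) for complete lines appended after byte `offset`."""
    with open(path, "rb") as handle:
        handle.seek(offset)
        data = handle.read()
    # Only consume complete lines; a partial last line is picked up on the next call.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end


def follow(path=LOG_PATH, interval=1.0):
    """Print lines as they are appended to the file. Runs until interrupted."""
    offset = 0
    while True:
        lines, offset = read_new_lines(path, offset)
        for line in lines:
            print(line)
        time.sleep(interval)
```

Calling `follow()` in a shell on the appliance prints the existing log content first and then every new line until you stop it with Ctrl+C.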

Workflow (vSphere example)

Before I walk you through an example deployment using static IP assignment, I recommend reading through the “Before you begin” section of the documentation.

I am using the vSphere Client to go through the individual steps but you can also use GOVC/PowerCLI to deploy the OVA in a single step.
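For the scripted route, govc can print an options file for an OVA with `govc import.spec` and consume it again with `govc import.ova -options=...`. The sketch below fills in such an options file in Python. It assumes the usual layout of that file (`PropertyMapping`, `NetworkMapping`, `Name`); the property keys in the usage example are placeholders, so take the real ones from the spec generated for the appliance.

```python
import copy


def fill_spec(spec, properties, network=None, name=None):
    """Return a copy of a govc import spec with OVF property values filled in.

    `spec` is the dict loaded from the JSON that `govc import.spec` prints.
    `properties` maps OVF property keys to the values you want to set.
    """
    result = copy.deepcopy(spec)
    mapping = result.get("PropertyMapping", [])
    known = {entry["Key"] for entry in mapping}
    unknown = sorted(set(properties) - known)
    if unknown:
        # Fail early instead of silently ignoring a mistyped key.
        raise KeyError(f"properties not present in the spec: {unknown}")
    for entry in mapping:
        if entry["Key"] in properties:
            entry["Value"] = properties[entry["Key"]]
    if network is not None:
        # Point every OVF network at the same target port group.
        for entry in result.get("NetworkMapping", []):
            entry["Network"] = network
    if name is not None:
        result["Name"] = name
    return result


# Usage with placeholder keys (hypothetical, not the appliance's real keys):
example_spec = {
    "PropertyMapping": [
        {"Key": "example.root_password", "Value": ""},
        {"Key": "example.ipaddress", "Value": ""},
    ],
    "NetworkMapping": [{"Name": "VM Network", "Network": ""}],
}
filled = fill_spec(
    example_spec,
    {"example.root_password": "change-me"},
    network="static-ip-portgroup",
    name="tce-demo-appliance",
)
```

Writing `filled` back to a file with `json.dump` gives you the options file for the import. Properties you do not set keep their default from the generated spec, which matches the behaviour of leaving a field untouched in the vSphere Client.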

For this example I prepared my vSphere environment. The networks are ready and a DHCP server is running inside of my first VLAN port group (VLAN 201) for the future TCE clusters. Also, I have added the PhotonOS and Ubuntu templates to my vCenter as shown in the screenshot.

The “Deploy OVF template…” dialogue can be launched from pretty much everywhere in the vSphere Client, hence I am not adding an extra screenshot. Since the OVA file is already on my workstation, I select the local file option.

As far as naming goes, I leave the default name but put the VM into a folder.

I chose to deploy the appliance at vSphere cluster-level and let DRS pick a suitable host.

My appliance is deployed on vSAN into a port group reserved for VMs with static IP assignment.

I recommend checking the Customization settings:

- The only mandatory input is a root password.

- If you leave the IP address blank, the system will default to DHCP.

- If you use DHCP, the system will use the DNS server from the DHCP configuration

- Leave the proxy settings blank if you do not use a network proxy

- Adjust the advanced settings to your needs, for instance if you have an IP overlap with the docker bridge

- If you modify the Tanzu installer ports, make sure the HTTP and HTTPS ports are not the same.
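Two of these checks are easy to script before you start the deployment. The following is a small sketch using only the Python standard library; the function names are my own, and the Docker bridge network in the usage example (172.17.0.0/16) is Docker's usual default, which may differ from what you configure in the advanced settings.

```python
import ipaddress


def check_installer_ports(http_port, https_port):
    """Return a list of problems with the chosen installer ports (empty if fine)."""
    problems = []
    for name, port in (("HTTP", http_port), ("HTTPS", https_port)):
        if not 1 <= port <= 65535:
            problems.append(f"{name} port {port} is outside 1-65535")
    if http_port == https_port:
        problems.append("HTTP and HTTPS ports must differ")
    return problems


def overlaps_docker_bridge(appliance_cidr, docker_bridge_cidr):
    """True if the appliance network and the Docker bridge network share addresses."""
    # strict=False accepts a host address with prefix, e.g. "192.168.201.10/24".
    appliance = ipaddress.ip_network(appliance_cidr, strict=False)
    bridge = ipaddress.ip_network(docker_bridge_cidr, strict=False)
    return appliance.overlaps(bridge)
```

For example, `check_installer_ports(5555, 5556)` returns an empty list for the default ports, and `overlaps_docker_bridge("172.17.10.5/24", "172.17.0.0/16")` returns `True`, which would be a reason to move the Docker bridge to another range.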

After finishing the dialogue, I wait for the data upload to complete and power on the appliance (no screenshot here).

The appliance is ready when the reverse proxy is started. In my case this is usually two or three minutes after powering on the VM. Time may vary in your environment.
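Instead of guessing the right moment, you can poll the HTTPS port from your workstation. This is a minimal sketch: the function name `wait_for_port` and the timeout values are my own choices, and an open TCP port only tells you that something is listening on it, not that the installer behind it is fully up.

```python
import socket
import time


def wait_for_port(host, port=5556, timeout=300.0, interval=5.0):
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Each attempt waits at most `interval` seconds for the connection.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Call it with the IP address of your appliance, for instance `wait_for_port("192.0.2.10")` (a documentation address, replace it with your own). It returns `True` as soon as the port accepts connections and `False` if the timeout expires first.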

If you forgot which IP you specified or you are using DHCP, you can check the appliance IP in the vSphere client.

As a last step, I can point my browser to port 5556 and use the installer launched over HTTPS.

Summary

On a prepared environment, you can get started with TCE within minutes using the appliance, without any modifications to your local workstation. Have a look at the GitHub Repo for TCE Demo appliance for future updates, report back if you find a bug (create an issue) or consider a contribution if you want to improve things. Any other feedback is always appreciated via Twitter.

For setting up TCE management clusters there are already several blog posts available:

- Deploying Your First Tanzu Community Edition (TCE) Management Cluster by Mark Ukotic

- TCE Cluster on vSphere by Rudi Martinsen

- Introducing VMware Tanzu Community Edition (TCE) - Tanzu Kubernetes for everyone! by William Lam

All configuration files will be created on the appliance. You can SSH into the VM to use the tanzu CLI or copy the files off using scp.

Update 2022-07-07

After publishing my project I learned about the OVA Appliance for Tanzu Community Edition from Russell Hamker. It’s another amazing project, so make sure to give it a spin as well.
