This is the fourth part in a multi-part series about the automation of VMware vSphere template builds. A template is a pre-installed virtual machine that acts as a master image from which other virtual machines are cloned. In this series I describe how to get started with automating the process. As I am writing these blog posts while working on the implementation, some aspects may seem “rough around the edges”.

- Part 1: Overview/Motivation

- Part 2: Building a pipeline for a MVP

- Part 3: An overview of packer

- Part 4: Concourse elements and extending the pipeline

This part focuses on the Concourse basics.

Overview

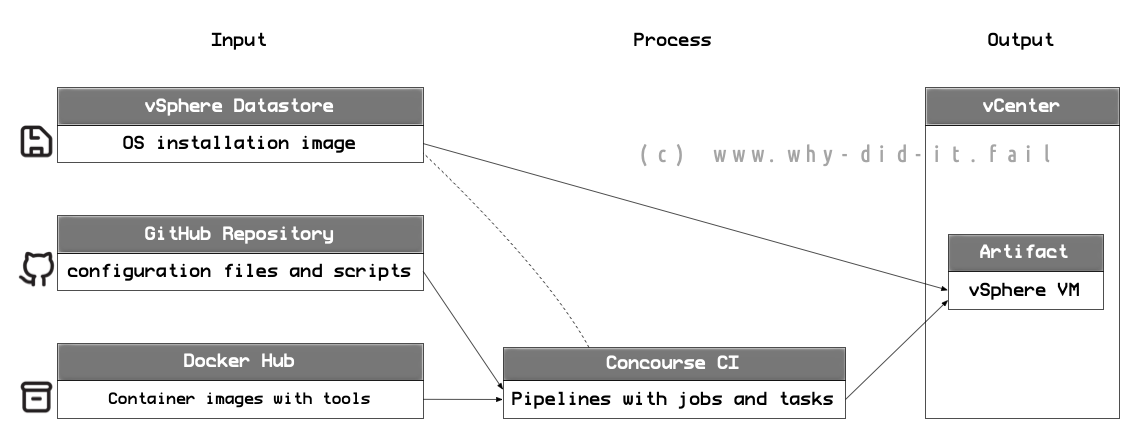

I opted for Concourse as it is open source, looks quite nice and has a declarative config that can be stored in a version control system. This blog post covers the Concourse CI elements that make up the pipeline. Knowing the basics about jobs, tasks and resources should give you a few ideas on how to design a workflow that fits your needs. To make it more practical, the existing pipeline example will be extended later in this post.

If you have not done so already, part 1 describes the initial Concourse setup with Docker. For more comprehensive coverage of Concourse I recommend the official documentation as well as this concourse tutorial.

Example pipeline

As a short recap, here is what the example configuration for the pipeline in the file pipeline-ubuntu180403.yaml looks like now:

resources:
- name: packer-files
  type: git
  icon: github-circle
  source:
    uri: << YOUR GITHUB REPOSITORY WITH SSH ACCESS, e.g. git@github.com:username/repository.git >>
    branch: master
    private_key: ((github_private_key))

jobs:
- name: build-ubuntu-180403-docker
  plan:
  - get: packer-files
  - task: create-image-with-packer
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      inputs:
      - name: packer-files
      outputs:
      - name: packer-build-manifest
        path: packer-files/packer/packer-manifest
      run:
        path: /bin/sh
        dir: packer-files/packer
        args:
        - -exc
        - |
          packer build -force -timestamp-ui -var-file=common/common-vars.json ubuntu-180403-docker.json

Pipeline elements

A minimal pipeline consists of a single job and the whole pipeline configuration is stored in a yaml file. In a saner setup the minimum configuration would be the combination of a resource and at least one job. A more advanced pipeline consists of multiple jobs where each job passes down the transformed input as output to the next job.

D’oh! Now I tried to explain a term by introducing terms that need further explanation. Hang on, I will try to re-write it like this:

A pipeline allows you to build logical constructs to transform a given input into the desired end state (output) by executing a series of defined steps. Right now, I have opted for one pipeline for every template I want to build. While this can be optimized (a lot!) it also ensures that a change in my configuration just affects a single build process. Now, what are the pipeline elements?

Resources

The documentation puts it short and crisp:

Resources […] represent all external inputs to and outputs of jobs in the pipeline.

The example pipeline uses a resource of the type git, i.e. a Git repository. The code should already give you a pretty good idea of how it is handled:

resources:
- name: packer-files
  type: git
#[...]
  - get: packer-files
#[...]
      inputs:
      - name: packer-files

In this example, the repository is pulled (get:) when the job starts and it is inserted (inputs:) into the execution environment (container) at runtime. As a result, each input resource is placed into a subfolder, named after the resource, in the working directory (entry point) of the container:

.
└── <resource-folder, e.g. packer-files>
    └── <files and folders from the repository>

The whole concept is a rather nice way to separate the execution code from the data (packer configuration) which can reside in a version-controlled repository and is always pulled with the latest changes.

It is also possible to write something into a git repository as output. On top of that, there are many more options available to extend your pipeline.

Jobs

A job is a unit of work that is carried out according to a plan. To execute this plan, you may need resources as input (here: get: packer-files) and may execute tasks to produce results (here: task: create-image-with-packer). You can also hand the results (here: outputs:) down the line or store them outside your pipeline in a resource.

Tasks

Tasks actually do things within a job and a job can contain more than one task. The execution environment for a task is usually a container:

config:
  platform: linux
  image_resource:
    type: docker-image
    source:
      repository: dzorgnotti/packer-jetbrains-vsphere
      tag: latest
      username: ((docker_hub_user))
      password: ((docker_hub_password))

In this example I am pulling an image from docker hub that I created for this purpose. Note: I recommend that you specify the tag for the docker image on an extra line as shown in my example. In my tests the usual notation of repo/image:tag ended in errors.

What is actually executed within the task is specified in the run mapping:

run:
  path: /bin/sh
  dir: packer-files/packer
  args:
  - -exc
  - |
    packer build -force -timestamp-ui -var-file=common/common-vars.json ubuntu-180403-docker.json

Here, I run a shell (path: /bin/sh), change the working directory (dir: packer-files/packer) and provide the actual packer command as an argument (args:). The benefit of this approach is the direct visibility of what will happen when the task is executed. The downside is that it does not work well with more than a few lines of code. The alternative is to execute scripts. These can be part of your repository (e.g. as a git submodule) so that they are pulled during the execution and are kept under version control as well.
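As a minimal sketch of the script-based alternative, the job below hands a script from the repository to the shell instead of inlining the command. The script name build-template.sh and its location under packer-files/scripts are assumptions made for illustration, and the script would have to change into the packer folder and call packer build itself. The fragment also assumes the packer-files resource from the example pipeline.

```yaml
jobs:
- name: build-ubuntu-180403-docker
  plan:
  - get: packer-files
  - task: create-image-with-packer
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      inputs:
      - name: packer-files
      outputs:
      - name: packer-build-manifest
        path: packer-files/packer/packer-manifest
      run:
        path: /bin/sh
        args:
        # Hypothetical script; it is expected to change into
        # packer-files/packer and call "packer build" itself.
        - packer-files/scripts/build-template.sh
```

Passing the script to /bin/sh as an argument means it does not need the executable bit set in the repository.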

Jobs vs. tasks

One of the key questions is “do I use a single job with multiple tasks, or do I use multiple jobs with just one (or a few) task(s)?”.

The answer to that is “it depends”.

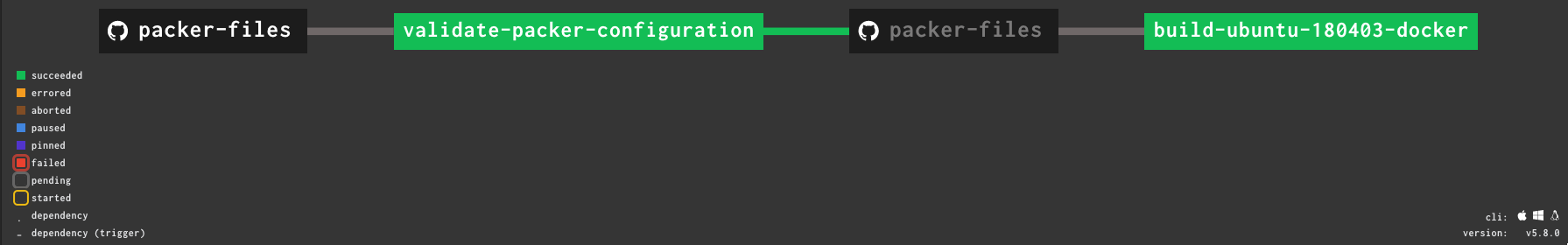

Having multiple jobs allows for great visibility in the pipeline (each job is one of these nice boxes in the UI). It also gives you flexibility, as you can work with logic constructs (e.g. on_success). Jobs can also be triggered independently of each other (whether that actually makes sense depends on the job), whereas tasks cannot be triggered individually, so the whole job must be executed again.
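To illustrate such a logic construct, here is a sketch that attaches an on_failure hook to the build job; it is the counterpart of on_success and runs only when the job fails. The task name report-build-failure and the echo message are placeholders, in practice this is where a notification would go. The fragment assumes the packer-files resource from the example pipeline.

```yaml
jobs:
- name: build-ubuntu-180403-docker
  plan:
  - get: packer-files
  - task: create-image-with-packer
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      inputs:
      - name: packer-files
      run:
        path: /bin/sh
        dir: packer-files/packer
        args:
        - -exc
        - |
          packer build -force -timestamp-ui -var-file=common/common-vars.json ubuntu-180403-docker.json
  # Job-level hook: this step runs only if the plan above fails.
  on_failure:
    task: report-build-failure   # hypothetical task name
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      run:
        path: /bin/sh
        args:
        - -c
        - |
          echo "Template build failed, check the job log."
```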

Another aspect to consider is the additional complexity of multiple jobs for instance when handling output:

Between tasks you can hand over the output of previous tasks to the current task by using outputs/inputs definitions; everything gets passed along when both reference the same name. In the example I already specified that the build task provides an output: the folder packer uses to write the build manifest after a successful run.

outputs:
- name: packer-build-manifest
  path: packer-files/packer/packer-manifest

But: If you want to pass output between two jobs you need stateful storage (e.g. S3). It must be defined as a resource in the pipeline, and the jobs must be instructed to get and put the files/directories in question.
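A rough sketch of what this could look like with the s3 resource type follows. The resource name build-manifest, the follow-up job, all ((s3_...)) variable names and the manifest file name packer-manifest.json are assumptions for illustration; the packer-files resource is taken from the example pipeline.

```yaml
resources:
# Added next to the existing packer-files resource.
- name: build-manifest                  # hypothetical resource name
  type: s3
  source:
    bucket: ((s3_bucket))               # hypothetical variable names
    region_name: ((s3_region))
    access_key_id: ((s3_access_key_id))
    secret_access_key: ((s3_secret_access_key))
    # Assumed object key; versioned_file needs a bucket with versioning enabled.
    versioned_file: packer-manifest.json

jobs:
- name: build-ubuntu-180403-docker
  plan:
  - get: packer-files
  - task: create-image-with-packer
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      inputs:
      - name: packer-files
      outputs:
      - name: packer-build-manifest
        path: packer-files/packer/packer-manifest
      run:
        path: /bin/sh
        dir: packer-files/packer
        args:
        - -exc
        - |
          packer build -force -timestamp-ui -var-file=common/common-vars.json ubuntu-180403-docker.json
  # Upload the manifest so that other jobs can fetch it.
  - put: build-manifest
    params:
      file: packer-build-manifest/packer-manifest.json   # assumed file name

- name: show-build-manifest             # hypothetical follow-up job
  plan:
  - get: build-manifest
    trigger: true
    passed: [build-ubuntu-180403-docker]
  - task: print-manifest
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      inputs:
      - name: build-manifest
      run:
        path: /bin/sh
        args:
        - -exc
        - |
          cat build-manifest/packer-manifest.json
```

The second job only sees what the first job has put into the bucket, which is exactly the extra moving part you avoid when both steps are tasks of the same job.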

Extending the pipeline

Armed with basic knowledge about the fundamental elements of Concourse, it is time to shift gears and extend the pipeline. This will include adding a new job as well as extending the existing job with another task. Both elements will be very simple examples. The goal is to keep the complexity down for the sake of this post. The complete example is in a new branch on GitHub.

A new job to validate the configuration

The new job will do a code validation for the packer configuration and precede the existing build job. If the validation fails, the build job will not start - that simple. This is the additional code for the pipeline configuration:

[...]
jobs:
- name: validate-packer-configuration
  plan:
  - get: packer-files
  - task: run-packer-validate
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/packer-jetbrains-vsphere
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      inputs:
      - name: packer-files
      run:
        path: /bin/sh
        dir: packer-files/packer
        args:
        - -exc
        - |
          packer validate -syntax-only -var-file=common/common-vars.json ubuntu-180403-docker.json
- name: build-ubuntu-180403-docker
  plan:
  - get: packer-files
    trigger: true
    passed: [validate-packer-configuration]
  - task: create-image-with-packer
[...]

Apart from the name (name: validate-packer-configuration) the new job is nearly identical to the existing build job. It will execute a single task (task: run-packer-validate) with the same container image (repository: dzorgnotti/packer-jetbrains-vsphere) and resources (get: packer-files) but instead of telling packer to build something the validate command is called to run a syntax check (packer validate -syntax-only […]) on the configuration files.

The instruction to start the next job only when the validation is successful is specified in the second job as part of the build plan (trigger: true / passed: [validate-packer-configuration]).

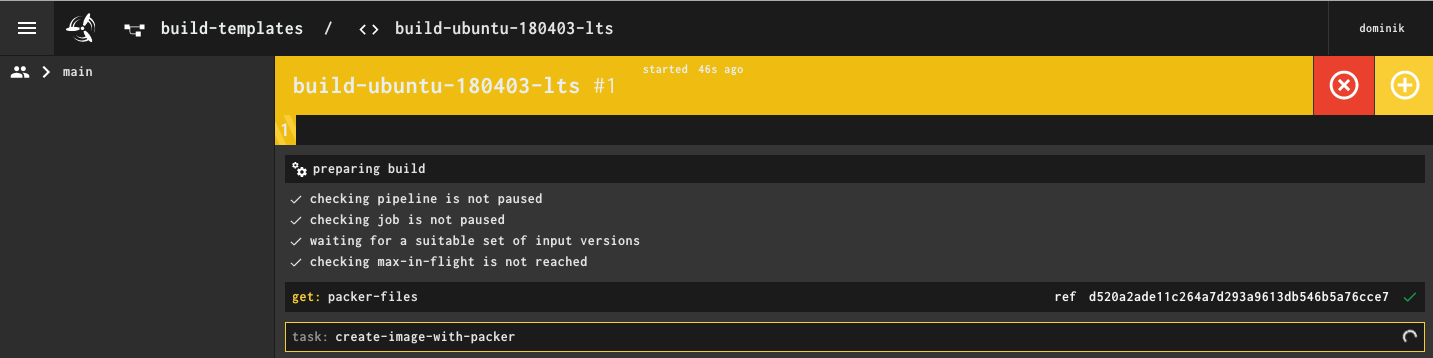

A new task for the build process to add some vSphere tags

To provide an example of how to make use of output/input I will extend the build job with another task:

The new task (task: add-vm-tags-with-govc) uses the packer manifest specified as output in the existing task (task: create-image-with-packer) as an input. Here is what the additional code for the pipeline looks like:

[...]
  - task: add-vm-tags-with-govc
    config:
      platform: linux
      image_resource:
        type: docker-image
        source:
          repository: dzorgnotti/vmware-govc
          tag: latest
          username: ((docker_hub_user))
          password: ((docker_hub_password))
      params:
        GOVC_URL: ((vcenter_host))
        GOVC_USERNAME: ((vcenter_user))
        GOVC_PASSWORD: ((vcenter_password))
        GOVC_INSECURE: true
        GOVC_DATACENTER: ((vcenter_datacenter))
      inputs:
      - name: packer-build-manifest # <<< the output of the previous task
      - name: packer-files
      run:
        path: /bin/sh
        args:
        - -c
        - |
          ./packer-files/scripts/govc-tagging.sh

This time another container image (repository: dzorgnotti/vmware-govc) is pulled from Docker Hub and two inputs are provided (packer-build-manifest, packer-files) for the runtime environment. The actual work is done in the run: section, where a shell script is executed (./packer-files/scripts/govc-tagging.sh). The script adds vSphere tags based on the build information by calling govc. When finished, it looks like this:

Side rant: Why spend 10 minutes writing the code in PowerShell when you can spend hours debugging bash scripts with their special character handling and other fun stuff? (And this comes from a Linux guy.)

Extra: Avoiding parallel build runs

In the final code example I added the serial parameter to the jobs, e.g. here:

- name: build-ubuntu-180403-docker
  serial: true

If set to true, it prevents parallel runs of the same job. In the case of the build job you want to avoid parallelism and queue your runs one after another, because only one virtual machine with a given name can exist at a time.

Extra: Run after code commits to git

If you want to start the pipeline as soon as you check in code to your packer-files repository, you can add the trigger statement to the first job:

jobs:
- name: validate-packer-configuration
  serial: true
  plan:
  - get: packer-files
    trigger: true

Shortly after a commit to the master branch the pipeline will automatically start and try to build a new image - just be careful with that.
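If that is too eager, one way to narrow the trigger is the paths setting of the git resource, which limits new versions to commits that touch the listed paths. The sketch below assumes that the packer configuration lives in a packer folder of the repository, as the dir: packer-files/packer setting in the example suggests; the uri is a placeholder.

```yaml
resources:
- name: packer-files
  type: git
  icon: github-circle
  source:
    uri: git@github.com:username/repository.git   # placeholder repository
    branch: master
    private_key: ((github_private_key))
    # Only commits that change files below packer/ produce a new version,
    # so edits elsewhere in the repository do not start a build.
    paths:
    - packer/*
```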

Summary

I covered the basic elements of concourse and the pipeline has grown to multiple stages, already showing a bit of the power that comes from automation. For a corporate environment there are many more tasks that can and should be added, from messaging the status to more advanced tests. In part five I will try to improve the pipeline by moving the passwords from cleartext files to a solution that manages secrets for you.
